After recently migrating quite a few legacy applications from unmanaged EC2 instances into Elastic Beanstalk, I noticed a few issues when deploying code to an environment under load.

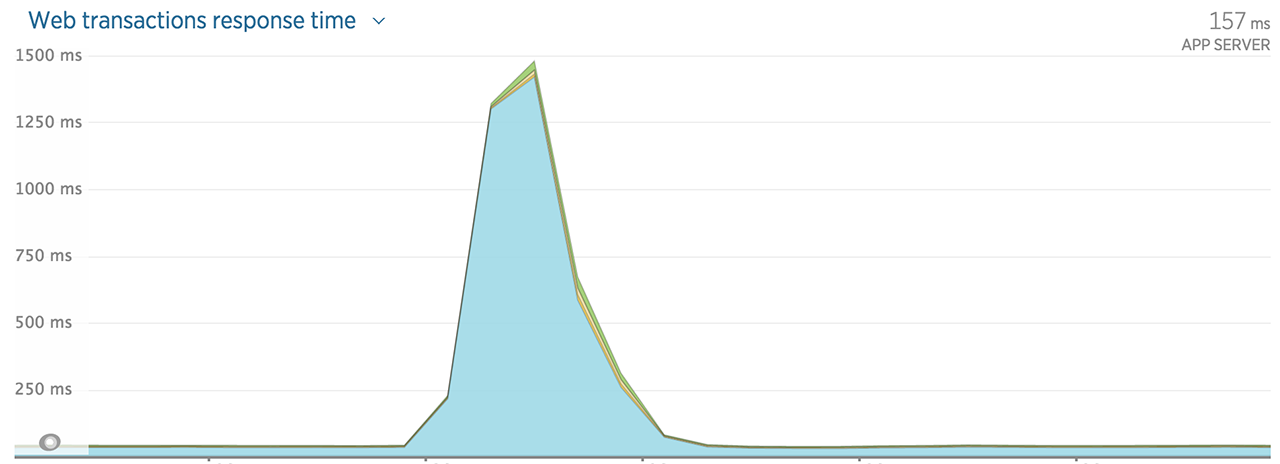

After a lot of investigation I discovered that, at the point Elastic Beanstalk deployed the code, Apache started spinning up many more child processes and the instances maxed out their CPU. Once the instances were added back into the ELB, it took minutes for CPU usage to drop to a level where they could serve a decent amount of traffic again, which is why the application response time went sky high.

One way Amazon suggests deploying new application versions is outlined in this guide: Deploying Versions with Zero Downtime. My concern with that approach was that the new instances would not be ‘warmed up’ enough to take all of the traffic immediately.

I wanted to find out exactly what was causing the problem, and it seemed to lie in the way Elastic Beanstalk switches code from /var/app/ondeck/ to /var/app/current/. During a deployment it issues a mv command on the /var/app/current/ directory to back it up, then moves /var/app/ondeck/ into its place. After some further research, the solution I settled on was something undocumented (and probably not really recommended): replacing the deploy script /opt/elasticbeanstalk/hooks/appdeploy/enact/01_flip.sh, which is what Elastic Beanstalk uses to run the deployment commands.

Warning: this solution is not documented and is definitely not supported by AWS, so use it with care.

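    files:
      "/opt/elasticbeanstalk/hooks/appdeploy/enact/01_flip.sh":
        mode: "000755"
        owner: root
        group: root
        encoding: plain
        content: |
          #!/usr/bin/env bash
          set -xe

          # Ask Elastic Beanstalk where code is staged and where it is served from.
          EB_APP_STAGING_DIR=$(/opt/elasticbeanstalk/bin/get-config container -k app_staging_dir)
          EB_APP_DEPLOY_DIR=$(/opt/elasticbeanstalk/bin/get-config container -k app_deploy_dir)

          # Back up the live code by copying it, so the live directory stays in place.
          if [ -d "$EB_APP_DEPLOY_DIR" ]; then
            cp -r "$EB_APP_DEPLOY_DIR" "$EB_APP_DEPLOY_DIR.old"
          fi

          # Sync the staged code over the live directory. The trailing slash on the
          # source copies its contents, including dotfiles that a /* glob would skip.
          rsync -r --delete "$EB_APP_STAGING_DIR/" "$EB_APP_DEPLOY_DIR/"

          # Remove the backup in the background.
          nohup rm -rf "$EB_APP_DEPLOY_DIR.old" >/dev/null 2>&1 &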

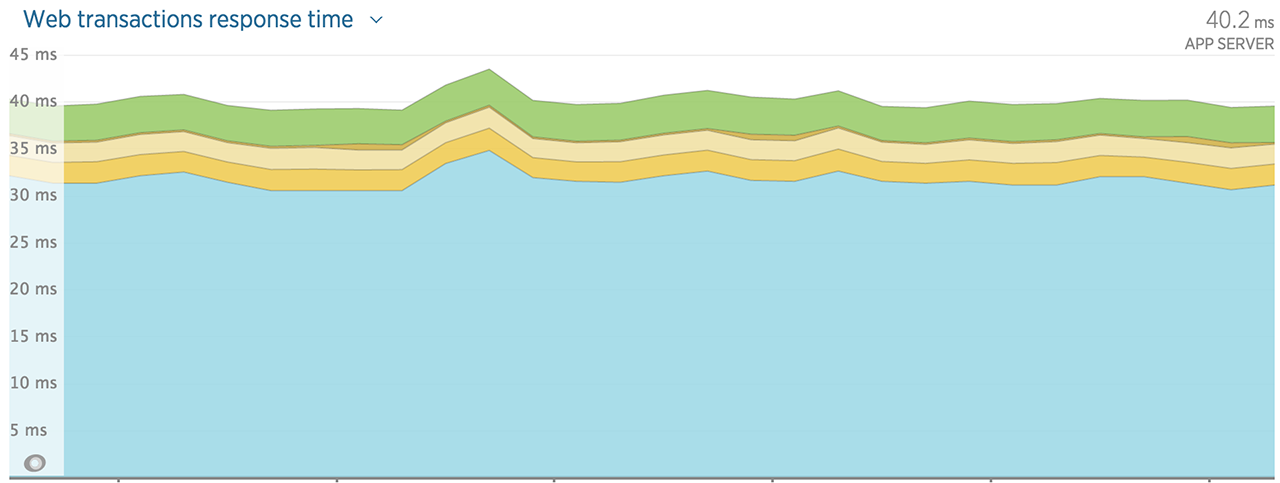

After making the change, I ran a deployment under roughly 10k requests per second and could not reproduce the issue I had been seeing, so the fix does seem to have made a difference.

In general rsync is probably better suited to this task, as it copies the files into the existing directory rather than flipping directories at the filesystem level. I can only assume the flip was the original issue if files were being read at that moment.
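To make the difference between the two approaches concrete, here is a minimal sketch that runs entirely in throwaway temp directories. The directory names, the file name index.php and the inode_of helper are all made up for illustration, and nothing here touches the real Elastic Beanstalk paths. It only shows that a mv flip puts a different directory (a new inode) at the live path, while an in-place sync keeps the same directory and changes its contents. It does not prove that this was the cause of the CPU spike.

```shell
#!/usr/bin/env bash
# Sketch only: compares a directory flip (mv) with an in-place sync.
# All paths live under a temp dir; names are hypothetical.
set -e

work=$(mktemp -d)

# Helper (hypothetical): print the inode number of a path.
inode_of() { ls -di "$1" | awk '{print $1}'; }

# Scenario 1: flip. Move the live dir aside, then move the staged dir in.
mkdir -p "$work/flip/current" "$work/flip/ondeck"
echo "old" > "$work/flip/current/index.php"
echo "new build" > "$work/flip/ondeck/index.php"
flip_before=$(inode_of "$work/flip/current")
mv "$work/flip/current" "$work/flip/current.old"
mv "$work/flip/ondeck" "$work/flip/current"
flip_after=$(inode_of "$work/flip/current")

# Scenario 2: in-place sync. The live dir itself is never moved.
mkdir -p "$work/sync/current" "$work/sync/ondeck"
echo "old" > "$work/sync/current/index.php"
echo "new build" > "$work/sync/ondeck/index.php"
sync_before=$(inode_of "$work/sync/current")
if command -v rsync >/dev/null 2>&1; then
  rsync -r --delete "$work/sync/ondeck/" "$work/sync/current/"
else
  # Stand-in when rsync is not installed; copies contents but does not delete.
  cp -R "$work/sync/ondeck/." "$work/sync/current/"
fi
sync_after=$(inode_of "$work/sync/current")
sync_content=$(cat "$work/sync/current/index.php")

echo "flip: live dir inode $flip_before -> $flip_after"
echo "sync: live dir inode $sync_before -> $sync_after"
echo "sync: live file now contains: $sync_content"

rm -rf "$work"
```

After the flip, the live path points at what used to be the staging directory, so the two inode numbers on the first line differ. After the sync, the directory is the same one as before and only the files inside it have been updated.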

Of course, your mileage may vary. This approach worked in this specific case, but it may not work in yours. Likewise, this is a solution to a problem at this moment in time: future changes to Elastic Beanstalk may remove the need for it, or fix something unrelated to this post that has the same ultimate effect. If you decide to try this, I would suggest testing it thoroughly before deploying it to a production environment, and keeping in mind that it may cause issues further down the line if AWS decides to change how things work.

For my use case, though, it works, and it means we can use Elastic Beanstalk without the user impact it would otherwise have had.
